AWS Lambda, How to Tape a Bunch of Cats Together

I was lucky enough to attend an AWS Architect and Developer Day in Melbourne recently, and as you’d expect, Lambda was an underlying theme. I didn’t attend a single presentation in the developer track that wasn’t plugging Lambda in one form or another. Lambda for micro-services, Lambda for Alexa, Lambda as a database event trigger, Lambda for transactions, Lambda for Lambda. Lambda. Lambda. λ.

I ❤️ Lambda. I think it’s an excellent tool in the ever-increasing bag of tricks that is AWS, but I’m a bit hesitant when Amazon talks about it as the golden hammer that is going to fix all your problems. For starters, you need some rigor around how you develop and deploy your functions, particularly in large development teams. For example, the multi-stage functionality offered by API Gateway is great, but no enterprise customer is going to let you run “DEV”, “TEST” and “PROD” environments in a single AWS account. Never going to happen. Ever. And nor should it. Separating environments (or stages) across multiple AWS accounts allows for the separation of responsibilities that large organisations love, and are generally required to have. So how should we look at using Lambda in a large company?

Developers Get Their Own AWS Credentials in a Linked Account

To use Lambda, developers need AWS credentials to deploy functions, hook in event triggers and explore the various managed services that AWS has to offer. If your company is using AWS and your backend developers don’t each have access to an AWS account, you are doing it wrong. That’s not to say it’s a developer free-for-all, where every man and his dog can spin up new P2 instances on a whim; there need to be sensible controls and monitoring in place to strike a balance. I’d recommend a set of IAM permissions that initially limits accounts to a subset of managed services, an appropriate tagging system to identify resource ownership, and a fairly aggressive monitoring strategy to ensure costs don’t blow out and developers aren’t spinning up botnets.
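As a starting point for those IAM controls, here is a minimal sketch of a policy document for a developer’s linked account, generated in Python so it can be reviewed and versioned like any other code. The allow-listed services, the blocked `p2.*`/`p3.*` instance families and the `Owner` tag key are hypothetical choices for illustration. `ec2:InstanceType` and `aws:RequestTag/Owner` are standard IAM condition keys, but check any real policy against the IAM documentation before attaching it.

```python
import json

# Hypothetical starting allow-list for a developer's linked account.
ALLOWED_SERVICES = ("lambda", "apigateway", "dynamodb", "s3", "logs", "cloudwatch", "ec2")
# Hypothetical expensive GPU instance families to block outright.
BLOCKED_INSTANCE_TYPES = ("p2.*", "p3.*")


def developer_policy(services=ALLOWED_SERVICES, blocked=BLOCKED_INSTANCE_TYPES):
    """Return an IAM policy document (as a dict) for a developer account."""
    instances = "arn:aws:ec2:*:*:instance/*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Allow only the approved services.
                "Sid": "AllowApprovedServices",
                "Effect": "Allow",
                "Action": [f"{svc}:*" for svc in services],
                "Resource": "*",
            },
            {   # An explicit Deny overrides the Allow above: no GPU instances.
                "Sid": "DenyGpuInstances",
                "Effect": "Deny",
                "Action": "ec2:RunInstances",
                "Resource": instances,
                "Condition": {"StringLike": {"ec2:InstanceType": list(blocked)}},
            },
            {   # Refuse to launch instances that carry no Owner tag.
                "Sid": "RequireOwnerTag",
                "Effect": "Deny",
                "Action": "ec2:RunInstances",
                "Resource": instances,
                "Condition": {"Null": {"aws:RequestTag/Owner": "true"}},
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(developer_policy(), indent=2))
```

Running the script prints JSON you can paste into the IAM console or a CloudFormation template. Cost monitoring, such as AWS Budgets alerts, would sit alongside this policy rather than inside it.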

Python & NodeJS Only for API Gateway Events

I’ve mentioned this in a previous post, but Java’s performance in Lambda makes it a non-starter if you want to use it behind API Gateway. Until AWS improves the cold-start performance of Java-based Lambda functions (see here), it’s unworkably slow. You can happily use Java for operations that aren’t time-sensitive, such as DynamoDB triggers, but don’t use it for API Gateway functions. If you’re thinking about writing another function to keep the container warm by triggering it every 5 minutes, back away from the keyboard, go get yourself a coffee, come back, and spend the time you were going to put into that trigger function rewriting your code in NodeJS or Python. You’ll be much happier; everybody will be much happier.
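To show how little is involved in that rewrite, here is a minimal Python handler for an API Gateway REST API using Lambda proxy integration. The function name `handler` and the `name` query parameter are hypothetical; the response shape (`statusCode`, `headers` and a string `body`) is what the proxy integration expects back.

```python
import json


def handler(event, context):
    """Minimal handler for an API Gateway Lambda proxy integration."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    # The proxy integration expects statusCode, headers and a string body.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Saved as `app.py` and configured with the handler `app.handler`, a request to `/?name=Melbourne` would return `{"message": "Hello, Melbourne!"}`.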

Lambda Function Migration

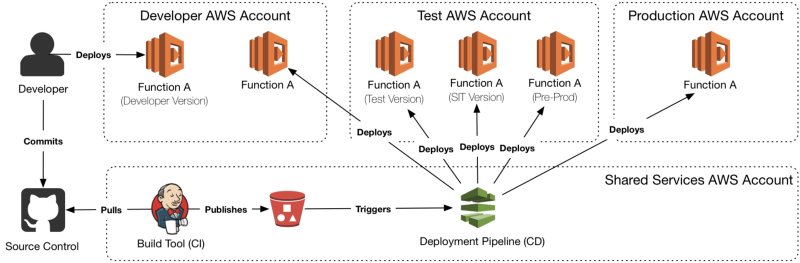

The diagram above demonstrates an approach for migrating Lambda functions between environments. An AWS account can host multiple environments, as the Test AWS account does, or a single environment, as production does. Why the multiple-account approach? It separates concerns, and ensures that breaches or problems in lower accounts have no chance of affecting production. You don’t want to be in the scenario where somebody accidentally deploys a development version of a Lambda function into your production API Gateway stage, something that can easily happen if you’re using a single AWS account with poorly configured IAM permissions. In this model, there are multiple stage-gates to ensure only verified changes make it to production.
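The stage-gate idea can be modelled in a few lines. This is a minimal sketch, not a real deployment tool: the stage names (`dev`, `sit`, `uat`, `prod`), the account aliases and the `deploy`/`verify` helpers are all hypothetical, and the only rule enforced is that a version must be verified in the previous stage before it can be deployed to the next one.

```python
from dataclasses import dataclass, field


@dataclass
class Stage:
    name: str
    account: str  # hypothetical account alias; in practice an AWS account ID
    deployed: set = field(default_factory=set)
    verified: set = field(default_factory=set)


def make_pipeline():
    # Hypothetical layout: DEV in its own account, SIT and UAT sharing
    # the Test account, PROD alone in the Production account.
    return [
        Stage("dev", "dev-account"),
        Stage("sit", "test-account"),
        Stage("uat", "test-account"),
        Stage("prod", "prod-account"),
    ]


def deploy(pipeline, version, target):
    """Deploy `version` to `target` only if the previous stage verified it."""
    names = [s.name for s in pipeline]
    i = names.index(target)  # raises ValueError for an unknown stage
    if i > 0 and version not in pipeline[i - 1].verified:
        raise PermissionError(f"{version} not verified in {names[i - 1]}")
    pipeline[i].deployed.add(version)
    return pipeline[i].account


def verify(pipeline, version, stage_name):
    """Record that `version` passed this stage's checks (the stage-gate)."""
    stage = next((s for s in pipeline if s.name == stage_name), None)
    if stage is None or version not in stage.deployed:
        raise ValueError(f"{version} is not deployed in {stage_name}")
    stage.verified.add(version)
```

Calling `deploy(pipeline, "v1.2.0", "prod")` straight after a dev deployment raises `PermissionError`, which is the behaviour you want from the real pipeline. In practice the gate would be your automated test results or a manual approval step in the pipeline tool.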

CI/CD is often bandied about as a single term, but it’s two distinct practices, and you may wish to use a different tool for each. For the CD component, a lot of enterprise customers will just chain a bunch of Jenkins servers together, which can work, but generally adds a lot of maintenance overhead. Other tools, like Spinnaker or AWS CodePipeline, are generally a better fit. In the diagram above, the pipeline tool is driven by an artefact uploaded to S3, which is then deployed out to the various accounts and environments.
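A pipeline driven off an S3 artefact works best when the artefact is built once and the identical object is deployed to every account. The sketch below zips a function’s source and names the object by content hash; the `build_artefact` helper and the `<function>/<version>/<sha256>.zip` key layout are hypothetical conventions, not something the pipeline tools require. Fixed timestamps on the zip entries make the hash depend only on file names and contents.

```python
import hashlib
import io
import zipfile
from pathlib import Path

# Fixed timestamp so the archive bytes depend only on file names and contents.
FIXED_DATE_TIME = (1980, 1, 1, 0, 0, 0)


def build_artefact(source_dir, function_name, version):
    """Zip a function's source and return (s3_key, zip_bytes).

    The key layout <function>/<version>/<sha256>.zip is a hypothetical convention.
    """
    root = Path(source_dir)
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        # Sorted traversal keeps entry order stable between builds.
        for path in sorted(root.rglob("*")):
            if not path.is_file():
                continue
            info = zipfile.ZipInfo(path.relative_to(root).as_posix(), FIXED_DATE_TIME)
            info.compress_type = zipfile.ZIP_DEFLATED
            info.external_attr = 0o644 << 16  # rw-r--r-- file permissions
            zf.writestr(info, path.read_bytes())
    data = buf.getvalue()
    digest = hashlib.sha256(data).hexdigest()
    return f"{function_name}/{version}/{digest}.zip", data
```

The bytes could then be uploaded with boto3’s `put_object` using the returned key; how the pipeline tool picks the object up depends on the tool you choose.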

Lambda for MVP

The real reason to use Lambda is that it’s fast to develop & deploy. You can quickly write a small set of functions to prove out a hypothesis, and then deploy them to run in a production-ready configuration. You’re not worrying about infrastructure, you’re not worrying about scaling, you’re focusing on the business problem at hand. You can code, deploy and analyse faster than you’ve ever been able to before, which makes it perfect for Minimum Viable Products. Every company should be interested in that flexibility.

If your Lambda function is being executed so many times a month that it’s no longer economical to run on Lambda, that’s a great problem to have, because it means your product is being used. You can then start to look at moving off Lambda onto more flexible infrastructure. I’ve worked on projects that sit on thousands of dollars’ worth of infrastructure a month but are only called a few thousand times a day, which is just not economical. You won’t be running your internet banking infrastructure on Lambda, but you might want to run your fraud complaints system on it.
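Whether Lambda is still economical comes down to simple arithmetic. The sketch below assumes on-demand prices of $0.20 per million requests and $0.0000166667 per GB-second (the long-standing x86 rates; check current pricing for your region), and ignores the free tier, API Gateway charges and data transfer. The function names are hypothetical.

```python
# Assumed on-demand prices; check current AWS pricing for your region.
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.0000166667


def monthly_lambda_cost(invocations, avg_duration_ms, memory_mb):
    """Rough monthly Lambda compute cost, ignoring the free tier."""
    request_cost = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    # Duration is billed in GB-seconds: seconds of runtime x GB of memory.
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return request_cost + gb_seconds * PRICE_PER_GB_SECOND


def break_even_invocations(fixed_monthly_cost, avg_duration_ms, memory_mb):
    """Monthly invocations at which Lambda costs as much as fixed infrastructure."""
    cost_per_call = monthly_lambda_cost(1, avg_duration_ms, memory_mb)
    return fixed_monthly_cost / cost_per_call
```

For the low-traffic case above (say 3,000 calls a day, or 90,000 a month, at a hypothetical 200 ms and 512 MB), `monthly_lambda_cost(90_000, 200, 512)` comes to roughly 17 cents. Against a hypothetical $2,000 a month of fixed infrastructure, `break_even_invocations(2000, 200, 512)` is a little over a billion invocations a month, which is roughly where moving off Lambda starts to pay.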

Conclusion

As I said, I ❤️ Lambda. I jump at any opportunity I get to use it. But large companies that are still skeptical of its magical powers are missing out on the opportunities to innovate just as quickly as that startup around the corner. It doesn’t need to be as footloose and fancy-free as Amazon makes it out to be, and you can still use it in a controlled and sensible way. If you’re an IT Architect, and you’re reading this, come to the dark side. The water is lovely.